What on Earth Is an AI-Powered Camera?

How do AI-powered cameras work, and what are they actually?

AI-powered cameras, the new buzzword in photography, are becoming so ubiquitous that it’s rare to spot a smartphone without some type of AI nowadays. “AI camera” or “AI-powered camera” is the hot phrase that audiences keep hearing at the launch of the latest smartphones, especially mid-range and high-end ones. For that reason alone, it’s worth knowing what exactly AI in cameras does, and whether it is really benefiting the photography world or is just a gimmick.

The gist of Artificial Intelligence :

AI is about creating machines capable of solving problems like we do, through reasoning, intuition and creativity.

Artificial intelligence machines imitate human behavior, but what is their exact use?

AI machines find their application as a connecting point between the real and the virtual world. For example, AI helps virtual assistants like Siri and Alexa understand what you are saying. It can also spot credit card fraud or spam; in general, it applies wherever we can teach a computer to think or, at least, learn. AI is generally split into subsets of technology that try to emulate what humans do, such as speech recognition, voice-to-text dictation, image recognition and face scanning, computer vision, and machine learning. On Google’s Pixel 3, many of the phone’s best features, whether it’s predictive battery saving, adaptive brightness or the ability to screen calls, are made better with AI.

But what’s it got to do with cameras? Computational photography and time-saving photo editing, that’s what. And voice activation.

Let’s look at the key features of AI-powered cameras.

Face Recognition :

Face recognition has become a new method of secure authentication for mobile phones. If you own an iPhone X or later, you are probably using the face unlock feature. This face unlocking ability is actually an AI program. Aside from expensive iPhones, even cheaper Android smartphones now come with a face unlock feature. Face unlocking analyses the face of the end user and remembers it. It even learns about changes in the face, so if you completely shave your long beard or go for a bald summer look after years of dreadlocks, it will still manage to recognize you and unlock your phone, provided you are its owner. In fact, face recognition is fast becoming the de facto authentication method for biometric applications.

But how does face recognition on a smartphone, more famously known as “AI Face Unlock”, work?

Facial recognition is a category of biometric software that maps an individual’s facial features mathematically and stores the data as a faceprint. The software uses deep learning algorithms to compare a live capture or digital image to the stored faceprint in order to verify an individual’s identity.
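To make the idea of comparing a live capture to a stored faceprint more concrete, here is a minimal sketch. It assumes the faceprint is simply a short list of numbers and that two faceprints are compared with cosine similarity against a threshold. The vectors, the `is_same_person` helper and the `0.9` threshold are all hypothetical values chosen for illustration; real systems use far longer vectors produced by a trained neural network.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def is_same_person(stored_faceprint, live_faceprint, threshold=0.9):
    """Accept the live capture only if it is close enough to the stored faceprint.

    The threshold is a hypothetical tuning value, not one taken from any real product.
    """
    return cosine_similarity(stored_faceprint, live_faceprint) >= threshold

# Made-up faceprints: the owner at enrollment, the owner today, and a stranger.
stored = [0.12, 0.80, 0.35, 0.44]
owner_today = [0.10, 0.82, 0.33, 0.46]
stranger = [0.90, 0.05, 0.60, 0.02]

print(is_same_person(stored, owner_today))
print(is_same_person(stored, stranger))
```

Raising the threshold makes false matches rarer but rejects the real owner more often, which is the trade-off any biometric system has to tune.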

High-quality cameras in mobile devices have made facial recognition a viable option for authentication as well as identification. Apple’s iPhone X, for example, includes Face ID technology that lets users unlock their phones with a faceprint mapped by the phone’s camera. The phone’s software, which is designed with 3-D modeling to resist being spoofed by photos or masks, captures and compares over 30,000 variables.

What is Face ID?

Face ID is a form of biometric authentication. Rather than a password (something you know) or a security dongle or authentication app (something you have), biometrics are something you are. Fingerprint recognition is also a biometric.

Instead of one or more fingerprints, as with Touch ID, Face ID relies on the unique characteristics of your face.

And how does Face ID work?

Face ID is designed to confirm user attention, provide robust authentication with a low false match rate, and mitigate both digital and physical spoofing.

The TrueDepth camera automatically looks for your face when you wake iPhone X by raising it or tapping the screen, as well as when iPhone X attempts to authenticate you to display an incoming notification or when a supported app requests Face ID authentication. When a face is detected, Face ID confirms attention and intent to unlock by detecting that your eyes are open and directed at your device; for accessibility, this is disabled when VoiceOver is activated or can be disabled separately, if required.

Once it confirms the presence of an attentive face, the TrueDepth camera projects and reads over 30,000 infrared dots to form a depth map of the face, along with a 2D infrared image. This data is used to create a sequence of 2D images and depth maps, which are digitally signed and sent to the Secure Enclave. To counter both digital and physical spoofs, the TrueDepth camera randomizes the sequence of 2D images and depth map captures, and projects a device-specific random pattern. A portion of the A11 Bionic processor’s neural engine — protected within the Secure Enclave — transforms this data into a mathematical representation and compares that representation to the enrolled facial data. This enrolled facial data is itself a mathematical representation of your face captured across a variety of poses.

Facial matching is performed within the Secure Enclave using neural networks trained specifically for that purpose. According to Apple, it developed the facial matching neural networks using over a billion images, including IR and depth images collected in studies conducted with the participants’ informed consent. Apple says it worked with participants from around the world to include a representative group of people accounting for gender, age, ethnicity, and other factors, and augmented the studies as needed to provide a high degree of accuracy for a diverse range of users.

Face ID is designed to work with hats, scarves, glasses, contact lenses, and many sunglasses. Furthermore, it’s designed to work indoors, outdoors, and even in total darkness. An additional neural network that’s trained to spot and resist spoofing defends against attempts to unlock your phone with photos or masks. Face ID data, including mathematical representations of your face, is encrypted and only available to the Secure Enclave. This data never leaves the device. It is not sent to Apple, nor is it included in device backups. The following Face ID data is saved, encrypted only for use by the Secure Enclave, during normal operation:

The mathematical representations of your face calculated during enrollment.

The mathematical representations of your face calculated during some unlock attempts if Face ID deems them useful to augment future matching.

Face images captured during normal operation aren’t saved, but are instead immediately discarded once the mathematical representation is calculated for either enrollment or comparison to the enrolled Face ID data.

huff…

Another aspect of AI-powered cameras is AI software :

Ever heard about :

Google Lens ?

Playground ?

Night Sight?

Now Playing feature?

Pixel Perfect Portrait with Single Camera?

Super Res Zoom?

Well, yes, you guessed it right: we are talking about the most impressive guys in town, the Google Pixel phones.

The answer, for Google, was clear: anything you can do in AI, we can do better. The challenge was “not to launch gimmicky features, but to be very thoughtful about them, with the intent to let Google do things for you on the phone,” said Mario Queiroz, vice president of product management at Google.

At the same time, being thoughtful about using AI in photography also means being careful not to insert biases. This is something that Google has had to reckon with in the past, when its image-labeling technology made a terrible mistake, underscoring the challenges of using software to categorize photos. Google doing more things for you means it’s making more decisions about what a “good” photo looks like.

How does Google’s AI software work?

As we are talking specifically about cameras, let’s stick to that and cover only those areas for now.

Super Res Zoom :

As Google states: _“The Super Res Zoom technology in Pixel 3 is different and better than any previous digital zoom technique based on upscaling a crop of a single image, because we merge many frames directly onto a higher resolution picture. This results in greatly improved detail that is roughly competitive with the 2x optical zoom lenses on many other smartphones. Super Res Zoom means that if you pinch-zoom before pressing the shutter, you’ll get a lot more details in your picture than if you crop afterwards.”_

It uses a technique called drizzle, which captures multiple frames from slightly shifted positions and later combines them.

It makes use of the minor movements of your hand shaking while you hold the phone to capture multiple, slightly offset frames. If the phone is placed on a tripod, the camera’s optical image stabilization module is moved slightly to mimic these movements and capture additional frames.

Through improved algorithms, Google also overcame problems like excessive noise, ghosting and motion blur to achieve a good photo. The company claims this method gives a satisfactory result for photos with 2x or 3x zoom captured in ample light.
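The idea of merging several slightly shifted frames onto a finer grid can be illustrated with a toy one-dimensional example. This is only a sketch under strong assumptions: the scene is a single row of numbers, every frame’s offset is known exactly, and nothing moves between frames. The function names `capture_frame` and `merge_frames` are made up for this illustration, and Google’s actual pipeline also has to estimate the alignment and cope with noise, ghosting and motion blur.

```python
def capture_frame(scene, offset, scale=2):
    """Simulate a low-resolution frame: keep every `scale`-th sample, starting at `offset`."""
    return scene[offset::scale]

def merge_frames(frames, offsets, scale=2):
    """Place each low-res sample at its shifted position on a finer grid, then average overlaps."""
    size = len(frames[0]) * scale
    total = [0.0] * size
    count = [0] * size
    for frame, offset in zip(frames, offsets):
        for i, value in enumerate(frame):
            position = i * scale + offset
            total[position] += value
            count[position] += 1
    # Grid cells that no frame covered stay unknown (None).
    return [t / c if c else None for t, c in zip(total, count)]

scene = [10, 20, 30, 40, 50, 60, 70, 80]   # the "real world" at full detail
offsets = [0, 1, 0]                        # hand shake moved the second frame by one fine-grid step
frames = [capture_frame(scene, offset) for offset in offsets]

single = merge_frames(frames[:1], offsets[:1])
merged = merge_frames(frames, offsets)
print(single)   # half of the fine grid is still unknown
print(merged)   # the shifted frame fills in the gaps
```

A single frame only covers every second position on the fine grid, which is why cropping and upscaling one image cannot recover the missing detail, while the shifted frame supplies exactly those samples.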

Night Sight :

Night Sight is a new feature of the Pixel Camera app that lets you take sharp, clean photographs in very low light, even in light so dim you can’t see much with your own eyes. It works on the main and selfie cameras of all three generations of Pixel phones, and does not require a tripod or flash. The computational photography and machine learning techniques that make Night Sight work are largely built on top of HDR+.

Left: iPhone XS. Right: Pixel 3 Night Sight.

Night Sight works on a similar principle of merging multiple frames, although at full sensor resolution and not in real time. According to Google, Pixel 1 and 2 use HDR+’s merging algorithm, modified and re-tuned to strengthen its ability to detect and reject misaligned pieces of frames, even in very noisy scenes. Pixel 3 uses Super Res Zoom, similarly re-tuned, whether you zoom or not. While the latter was developed for super-resolution, it also works to reduce noise, since it averages multiple images together. Super Res Zoom produces better results for some nighttime scenes than HDR+, but it requires the faster processor of the Pixel 3.

By the way, all of this happens on the phone in a few seconds. If you’re quick about tapping on the icon that brings you to the filmstrip (wait until the capture is complete!), you can watch your picture “develop” as HDR+ or Super Res Zoom completes its work.
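Why averaging multiple images reduces noise can be seen in a small simulation. The sketch below assumes a perfectly still, uniformly lit scene and simple Gaussian sensor noise; the brightness, noise level and frame count are arbitrary illustrative numbers, and the helper names are hypothetical. Real Night Sight frames also have to be aligned before merging, which this example skips.

```python
import random
import statistics

def capture_noisy_frame(true_brightness, noise_sigma, num_pixels, rng):
    """Simulate one dark, noisy exposure of a flat grey scene."""
    return [true_brightness + rng.gauss(0, noise_sigma) for _ in range(num_pixels)]

def average_frames(frames):
    """Average the same pixel across all frames (frames are assumed to be aligned)."""
    return [sum(pixel_values) / len(frames) for pixel_values in zip(*frames)]

rng = random.Random(42)  # fixed seed so the run is repeatable
frames = [capture_noisy_frame(50.0, 10.0, 2000, rng) for _ in range(16)]

single_noise = statistics.pstdev(frames[0])
merged_noise = statistics.pstdev(average_frames(frames))

print(f"noise in one frame:       {single_noise:.2f}")
print(f"noise after 16-frame avg: {merged_noise:.2f}")
```

With independent noise, averaging N frames shrinks the noise by roughly the square root of N, so 16 frames give a picture about four times cleaner than a single one.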

Single Camera Portrait Mode :

Historically, Portrait Mode-style images required an SLR camera with a large lens, a wide aperture and a steady photographer to capture the subject in focus. But today, roughly 85% of all photos are taken on mobile, which offers an interesting set of challenges: a small lens, a fixed aperture and a photographer who might not be so steady. To recreate this effect, research and hardware teams at Google worked hand-in-hand to develop a Portrait Mode process that’s almost as striking as the photos it takes.

AI is central to creating the Portrait Mode effect once an image is captured. The Pixel 2 and later Pixel phones contain a specialized neural network that researchers trained on almost a million images to recognize what’s important in a photo. Instead of treating each pixel as just a pixel, Google’s software tries to understand what it is. By using machine learning, the device can make predictions about what should stay sharp in the photo and create a mask around it.

Pixel Camera Product Manager, Isaac Reynolds, explains, “When we picked the hardware, we knew we were getting a sensor where every pixel is split into two sub-pixels. This architecture lets us take two pictures out of the same camera lens at the same time: one from the left side of the lens and one through the right. This tiny difference in perspective gives the camera depth perception just like your own two eyes, and it generates a depth map of objects in the image from that.”
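The “tiny difference in perspective” can be turned into depth information by measuring how far an object appears to shift between the two views. The sketch below is a simplified stereo-matching illustration on a single row of pixels, not Google’s implementation: it tries a few whole-pixel shifts and keeps the one where the two rows agree best. The function name `estimate_disparity` and the sample rows are made up, and real dual-pixel shifts are much smaller than a whole pixel.

```python
def estimate_disparity(left_row, right_row, max_shift=3):
    """Return the shift (in pixels) at which left_row best lines up with right_row."""
    best_shift, best_cost = 0, float("inf")
    for shift in range(max_shift + 1):
        # Compare left_row[i + shift] with right_row[i] over the overlapping part.
        pairs = list(zip(left_row[shift:], right_row))
        cost = sum(abs(l - r) for l, r in pairs) / len(pairs)
        if cost < best_cost:
            best_shift, best_cost = shift, cost
    return best_shift

left_view = [0, 0, 9, 5, 1, 0, 0, 0]    # a bright object as seen through the left half of the lens
near_right = [9, 5, 1, 0, 0, 0, 0, 0]   # same object, shifted by two pixels in the right view
far_right = [0, 0, 9, 5, 1, 0, 0, 0]    # a distant object barely moves between the views

print(estimate_disparity(left_view, near_right))
print(estimate_disparity(left_view, far_right))
```

In this simplified stereo model a larger shift means the object is closer to the camera. Repeating the measurement for small patches across the whole image yields a depth map.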

This allows the software to provide the finishing touch: a realistic blur. Using the depth map, the Portrait Mode software blurs the pixels behind the subject, creating the beautifully soft background known as bokeh. The result is a high-quality image that rivals professional shots with just a quick tap. And as big as this breakthrough was in computational photography, it was an even bigger breakthrough for selfies. Now, the front-facing camera can capture that professional-quality shot from anywhere, with a quick point, pose, and shoot.
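As a rough illustration of how a depth map can drive the blur, the sketch below keeps pixels that are close to the camera untouched and replaces every farther pixel with the average of its neighbours (a plain box blur). Everything here is an assumption made for the example: the tiny greyscale image, the depth values, the `focus_depth` cut-off and the `portrait_blur` function itself. A real Portrait Mode varies the blur strength with depth and takes care not to smear the subject into the background.

```python
def portrait_blur(image, depth_map, focus_depth=1.5, radius=1):
    """Blur pixels whose depth exceeds focus_depth and leave nearer pixels sharp."""
    height, width = len(image), len(image[0])
    output = [row[:] for row in image]
    for y in range(height):
        for x in range(width):
            if depth_map[y][x] <= focus_depth:
                continue  # part of the subject: keep it sharp
            neighbours = [
                image[ny][nx]
                for ny in range(max(0, y - radius), min(height, y + radius + 1))
                for nx in range(max(0, x - radius), min(width, x + radius + 1))
            ]
            output[y][x] = sum(neighbours) / len(neighbours)
    return output

# Left two columns: the subject (depth 1). Right two columns: a busy, distant background (depth 5).
image = [
    [10, 200, 10, 200],
    [10, 200, 10, 200],
    [10, 200, 10, 200],
]
depth_map = [
    [1, 1, 5, 5],
    [1, 1, 5, 5],
    [1, 1, 5, 5],
]

for row in portrait_blur(image, depth_map):
    print([round(value) for value in row])
```

The subject columns come out unchanged, while the harsh stripes in the background are smoothed towards a common value.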

AI Computational Photography :

Computational photography is a digital image processing technique that uses algorithms to replace optical processes, and it seeks to improve image quality by using machine vision to identify the content of an image. “It’s about taking studio effects that you achieve with Lightroom and Photoshop and making them accessible to people at the click of a button,” says Simon Fitzpatrick, Senior Director, Product Management at FotoNation, which provides much of the computational technology to camera brands. “So you’re able to smooth the skin and get rid of blemishes, but not just by blurring it — you also get texture.”

In the past, the technology behind ‘smooth skin’ and ‘beauty’ modes has essentially been about blurring the image to hide imperfections. “Now it’s about creating looks that are believable, and AI plays a key role in that,” says Fitzpatrick. “For example, we use AI to train algorithms about the features of people’s faces.”

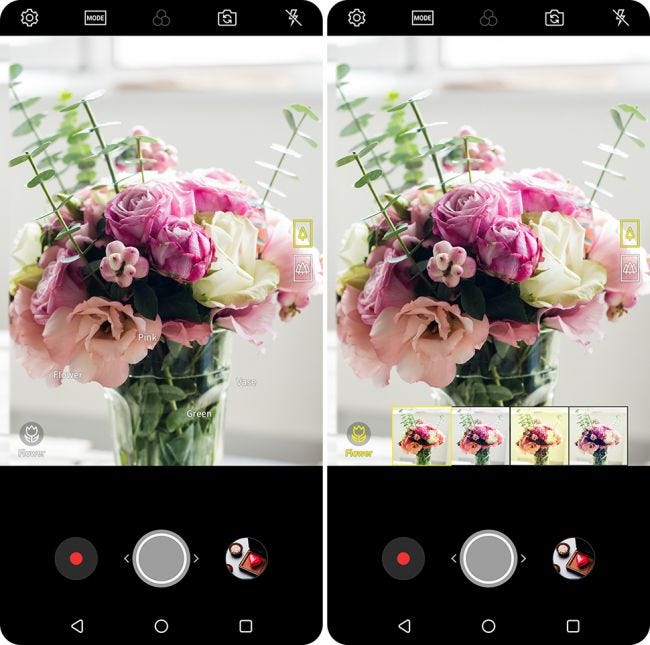

LG’s V30S ThinQ phone allows the user to select a professional image in its Graphy app and apply the same white balance, shutter speed, aperture and ISO. LG also just announced Vision AI, an image recognition engine that uses a neural network trained on 100 million images and recommends how to set the camera. It even detects reflections in the picture, the angle of the shot, and the amount of available light.

Special Mention — Google Clips :

Google Clips is smart enough to recognize great expressions, lighting and framing. So the camera captures beautiful, spontaneous images. And it gets smarter over time.

Google is also using AI in its new Google Clips wearable camera to capture and keep only particularly memorable moments. It uses an algorithm that understands the basics of photography, so it doesn’t waste time processing images that would definitely not make the final cut of a highlights reel. For example, it auto-deletes photographs with a finger in the frame and out-of-focus images, and favours those that comply with the general rule-of-thirds concept of how to frame a photo.
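To show what “favouring the rule of thirds” could look like in code, here is a hypothetical scoring sketch. It is not how Google Clips is actually implemented: the `rule_of_thirds_score` and `keep_frame` functions, the sharpness value between 0 and 1, and both thresholds are invented for illustration. The score is 1.0 when the subject sits exactly on one of the four rule-of-thirds intersections and drops to 0 in the corners of the frame.

```python
import math

def rule_of_thirds_score(subject_x, subject_y, width, height):
    """Score from 0 to 1 for how close the subject is to a rule-of-thirds intersection."""
    points = [
        (px, py)
        for px in (width / 3, 2 * width / 3)
        for py in (height / 3, 2 * height / 3)
    ]
    nearest = min(math.hypot(subject_x - px, subject_y - py) for px, py in points)
    # The farthest any point can be from its nearest intersection is a corner of the frame.
    worst_case = math.hypot(width, height) / 3
    return max(0.0, 1.0 - nearest / worst_case)

def keep_frame(sharpness, subject_xy, frame_size, min_sharpness=0.5, min_score=0.6):
    """Keep a frame only if it is in focus and reasonably well composed (hypothetical thresholds)."""
    if sharpness < min_sharpness:
        return False  # out-of-focus shots are dropped straight away
    score = rule_of_thirds_score(subject_xy[0], subject_xy[1], frame_size[0], frame_size[1])
    return score >= min_score

print(keep_frame(0.9, (100, 100), (300, 300)))  # sharp, subject on an intersection
print(keep_frame(0.2, (100, 100), (300, 300)))  # well framed but blurry
print(keep_frame(0.9, (0, 0), (300, 300)))      # sharp but subject stuck in a corner
```

A real camera would combine many more signals, such as the expressions and lighting mentioned earlier, but the principle of scoring each frame and discarding the weak ones stays the same.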

Hope you enjoyed the article! Leave a comment below or message me on Facebook if you have any questions or suggestions!