Understanding Android Camera Architecture - Part 2

Android is the most popular mobile operating system in the market today. Towards the end of the last decade, the camera emerged as one of the most important factors driving smartphone sales, and OEMs are competing hard to stay at the top. Smartphone cameras are built much like digital cameras, only in a far more compact package. Backed by high-end processing capabilities, they have taken a big leap and now compete with dedicated digital cameras. In this post, I’ll cover the general hardware architecture of a modern Android camera.

This is Part 2 of a series explaining camera architecture. In Part 1, I explained the basics and a few hardware components such as the lens, shutter, sensor and flash. You can read the original full article at blog.minhazav.dev/android-camera-hardware-explained or Part 1 here.

Image Signal Processor

An image signal processor (ISP), also called an image processing engine, is a specialized digital signal processor used for image processing in digital cameras, mobile phones and other devices.

Turning light from the camera sensor into beautiful images requires a complicated process involving a large amount of math and processing. An ISP is specialized hardware capable of performing these steps in an energy-efficient way. Some of the algorithms that run on an ISP are:

- Auto Focus, Auto White Balance: Auto focus ensures the resulting images are sharp. There are different types of auto-focus algorithms that an ISP can implement; these are explained in detail in my earlier article, Android camera subsystem. The ISP also monitors and adjusts color, white balance and exposure in real time so that pictures don’t come out too dark, too bright or off-color. All of this happens even before the shutter button is pressed.

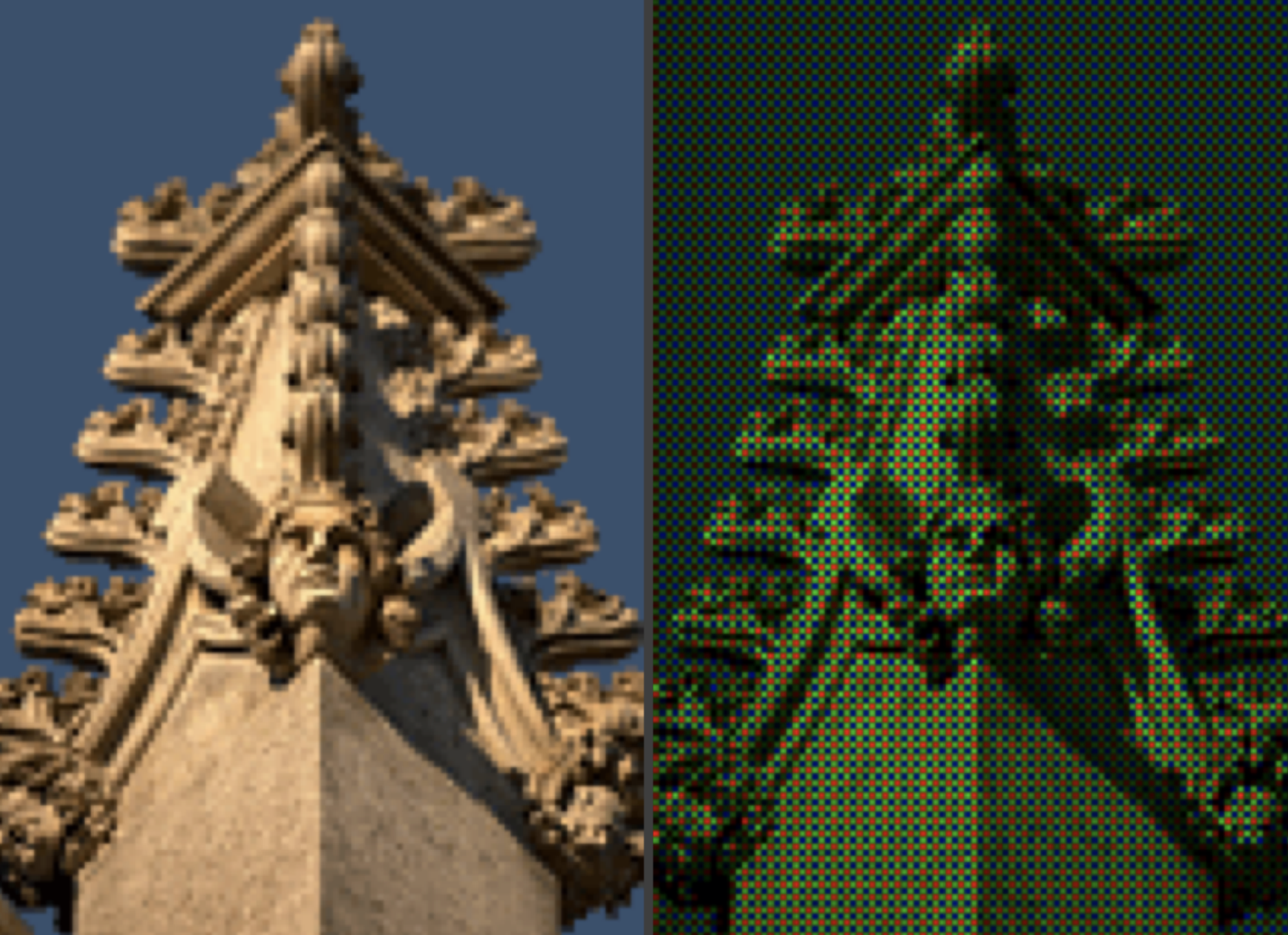

- Demosaic: CMOS sensors don’t sense red, green and blue at every pixel. Each pixel senses only one color, and the ISP estimates the missing values for each pixel from its neighbors. This is called demosaicing, and it’s probably the primary task handled by an ISP.

Figure: Image as captured by the sensor (right) and image produced after processing (left).

- Shading correction and geometric correction: Once an ISP has the RAW image data, it runs algorithms to correct lens shading and curvature distortion.

Figure: Image before geometric correction.

- Statistics: ISPs can efficiently compute statistics on incoming signals, such as histograms and sharpness maps.
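
To make the demosaic and white-balance steps above more concrete, here is a minimal Python sketch of two textbook techniques: bilinear demosaicing of a Bayer mosaic, and gray-world auto white balance, which works from per-channel averages (the kind of statistics an ISP gathers). It assumes an RGGB color filter layout and a tiny RAW frame held as a list of rows; the function names and pixel values are hypothetical, and a real ISP implements far more sophisticated versions in dedicated hardware.

```python
# Minimal sketch of two ISP steps, ASSUMING an RGGB Bayer layout.
# Real ISPs use edge-aware demosaicing and multi-cue AWB in hardware.

def bayer_color(y, x):
    """Color sampled at (y, x) in an RGGB mosaic: R G / G B, repeating."""
    if y % 2 == 0:
        return "R" if x % 2 == 0 else "G"
    return "G" if x % 2 == 0 else "B"


def demosaic_bilinear(raw):
    """Bilinear demosaic: fill each missing channel with the mean of the
    same-colored samples in the surrounding 3x3 window.
    Assumes the frame is at least 2x2, so every window sees all colors."""
    h, w = len(raw), len(raw[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            pixel = []
            for color in ("R", "G", "B"):
                if bayer_color(y, x) == color:
                    pixel.append(float(raw[y][x]))  # measured directly
                    continue
                samples = [
                    raw[ny][nx]
                    for ny in range(max(0, y - 1), min(h, y + 2))
                    for nx in range(max(0, x - 1), min(w, x + 2))
                    if bayer_color(ny, nx) == color
                ]
                pixel.append(sum(samples) / len(samples))  # interpolated
            row.append(tuple(pixel))
        out.append(row)
    return out


def gray_world_awb(rgb):
    """Gray-world AWB: scale R and B so their means match the G mean."""
    pixels = [p for row in rgb for p in row]
    n = len(pixels)
    mean_r = sum(p[0] for p in pixels) / n
    mean_g = sum(p[1] for p in pixels) / n
    mean_b = sum(p[2] for p in pixels) / n
    gain_r = mean_g / mean_r if mean_r else 1.0
    gain_b = mean_g / mean_b if mean_b else 1.0
    return [[(r * gain_r, g, b * gain_b) for (r, g, b) in row] for row in rgb]


# Usage: a flat gray patch under a green-tinted light (hypothetical values).
levels = {"R": 100, "G": 200, "B": 50}
raw = [[levels[bayer_color(y, x)] for x in range(4)] for y in range(4)]
balanced = gray_world_awb(demosaic_bilinear(raw))
print(balanced[0][0])  # (200.0, 200.0, 200.0)
```

Gray-world assumes the average color of a scene is neutral gray, so it struggles with scenes dominated by a single color; that is one reason production AWB combines several cues.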

An ISP is generally integrated into the SoC, but it can also come as a discrete chip. The ISP is one of the major factors limiting how many megapixels a camera (or smartphone) can efficiently process. For example, Qualcomm’s latest Spectra 380 ISP is engineered to support a single sensor of up to 48 MP, or two 22 MP sensors at once.
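
A quick back-of-the-envelope calculation shows why the ISP caps sensor resolution: every pixel of every frame has to flow through it. The sketch below assumes 10 bits per RAW pixel and 30 frames per second; both numbers are illustrative assumptions, not Spectra 380 specifications.

```python
# Back-of-the-envelope RAW data rate an ISP must ingest.
# ASSUMPTIONS (illustrative, not Spectra 380 specs): 10-bit RAW, 30 fps.

def raw_bytes_per_second(megapixels, bits_per_pixel=10, fps=30):
    """Bytes per second of RAW data produced by one sensor."""
    pixels_per_frame = megapixels * 1_000_000
    bytes_per_frame = pixels_per_frame * bits_per_pixel / 8
    return bytes_per_frame * fps


single_48mp = raw_bytes_per_second(48)
dual_22mp = 2 * raw_bytes_per_second(22)
print(f"one 48 MP sensor:  {single_48mp / 1e9:.2f} GB/s")
print(f"two 22 MP sensors: {dual_22mp / 1e9:.2f} GB/s")
```

Under these assumptions the two supported configurations land at a similar data rate (1.8 GB/s versus 1.65 GB/s), which is consistent with an ISP having a roughly fixed pixel-throughput budget that can be spent on one large sensor or split across two smaller ones.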

Digital Signal Processor

A DSP is a specialized microprocessor chip whose architecture is optimized for the operational needs of digital signal processing.

The operations performed by a DSP can also be executed by general-purpose processors, but a DSP performs them more efficiently and with higher performance. Some of the operations a DSP handles efficiently are:

- Matrix Operations

- Convolution for filtering

- Dot product

- Polynomial evaluation

- Fast Fourier transform
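
Several of the operations listed above reduce to repeated multiply-accumulate (MAC) steps, which is exactly what DSP hardware is built to speed up. The following Python sketch (function names are hypothetical, written for illustration only) shows a dot product, 1-D convolution expressed as a sliding dot product, and Horner's method for polynomial evaluation, all built on the same MAC loop. On a DSP these loops would be vectorized across many lanes; here they run serially.

```python
# Sketch: three DSP-friendly operations, all built on the
# multiply-accumulate (MAC) loop. Function names are illustrative only.

def dot(a, b):
    """Dot product: a chain of multiply-accumulate steps."""
    acc = 0
    for x, y in zip(a, b):
        acc += x * y  # one MAC
    return acc


def convolve_valid(signal, kernel):
    """1-D convolution (valid region only) as a sliding dot product.
    The kernel is reversed, as convolution requires."""
    k = len(kernel)
    flipped = kernel[::-1]
    return [dot(signal[i:i + k], flipped) for i in range(len(signal) - k + 1)]


def horner(coeffs, x):
    """Evaluate a polynomial (highest-degree coefficient first) with
    Horner's method: one MAC per coefficient."""
    acc = 0
    for c in coeffs:
        acc = acc * x + c  # one MAC
    return acc


print(dot([1, 2, 3], [4, 5, 6]))                   # 32
print(convolve_valid([1, 2, 3, 4, 5], [1, 1, 1]))  # [6, 9, 12]
print(horner([2, -3, 1], 4))                       # 2*4^2 - 3*4 + 1 = 21
```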

Because DSPs process vector math quickly and efficiently, they have become a great choice on the SoC for many machine learning and image processing workloads, which makes them ideal for smartphone cameras. Dedicated hardware for such workloads is often called a Neural Processing Unit, Neural Engine or ML processor.

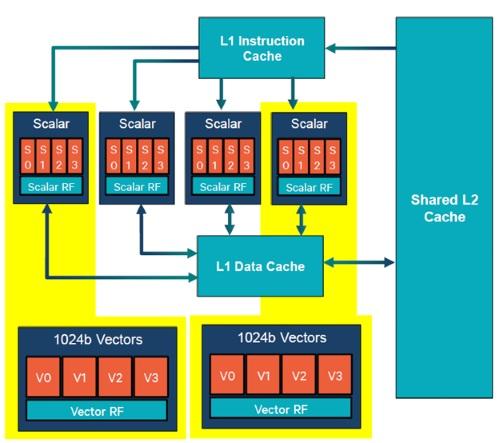

Qualcomm’s Hexagon is a family of DSPs with a 32-bit multithreaded architecture, designed to deliver high performance with low energy usage.

Figure: The Hexagon DSP architecture in the Snapdragon 835. Source: Qualcomm.

The Snapdragon 845’s Hexagon 685 DSP can process thousands of bits of vector data per cycle, compared with the hundreds of bits per cycle of an average CPU core. Other OEMs, such as Huawei and Apple, have also shipped SoCs with specialized ML hardware in their smartphones.
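
One way to read the "thousands of bits versus hundreds of bits" comparison is to count how many 8-bit pixel values a single vector instruction can touch. The sketch assumes a 1024-bit vector width (the wide mode of Hexagon's HVX vector extension) for the DSP, a 128-bit Arm NEON register for the CPU core, and a hypothetical 12 MP frame; it ignores how many vector units issue per cycle, so treat it as a rough illustration only.

```python
# Rough lane-count comparison. ASSUMED widths: 1024-bit DSP vector
# (Hexagon HVX wide mode) vs. 128-bit CPU SIMD (Arm NEON).
DSP_VECTOR_BITS = 1024
CPU_SIMD_BITS = 128
PIXEL_BITS = 8  # assumed 8-bit pixel values


def lanes(vector_bits, element_bits):
    """How many elements one vector instruction operates on."""
    return vector_bits // element_bits


def vector_ops_per_frame(pixels, vector_bits, element_bits=PIXEL_BITS):
    """Vector instructions needed to touch every pixel once (ceiling division)."""
    per_op = lanes(vector_bits, element_bits)
    return -(-pixels // per_op)


frame = 12_000_000  # hypothetical 12 MP frame
print(lanes(DSP_VECTOR_BITS, PIXEL_BITS), lanes(CPU_SIMD_BITS, PIXEL_BITS))
print(vector_ops_per_frame(frame, DSP_VECTOR_BITS),
      vector_ops_per_frame(frame, CPU_SIMD_BITS))
```

Under these assumptions one DSP vector instruction covers 128 pixels versus 16 for the CPU, an 8x reduction in instruction count for simple per-pixel operations.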

Specialized hardware in the latest smartphones

OEMs have been introducing specialized hardware primarily to boost machine learning capabilities, which are used extensively in image processing. Initially, this processing was done in software running on the CPU or GPU. Specialized low-level hardware has since helped reduce the latency of these steps, enabling features like live HDR (HDR in the viewfinder) and 4K video recording with HDR. Two notable OEMs that introduced such hardware are Google and Apple.

Google Pixel Visual Core

Google introduced a custom chip, the Pixel Visual Core, in the Pixel 2 and Pixel 2 XL alongside the main processing components to enhance image processing. According to Google, this extra chip made HDR+ processing around 5X faster at 1/10th of the power consumption. The Pixel Visual Core was designed to handle complex machine learning algorithms related to image processing, and it was included in the Pixel 3 series as well.

Apple iPhone Bionic chips

Apple has been shipping its A-series chips, such as the A13 Bionic introduced with the iPhone 11. Apple says each chip packs a CPU, a GPU and a Neural Engine for boosting machine learning performance. With its latest chip, Apple claimed to have made matrix multiplication, which is at the core of many ML operations, 6X faster. Apple also says it uses ML in the iPhone 11’s camera to help process images.
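
To see why faster matrix multiplication matters for ML, note that a fully connected neural-network layer is essentially one matrix multiply followed by a bias add and an activation. The sketch below is a plain-Python illustration with hypothetical names and values; it says nothing about how the Neural Engine actually implements this.

```python
# Sketch: a fully connected layer is a matrix multiply plus bias and ReLU.
# Plain Python for illustration; names and values are hypothetical.

def matmul(a, b):
    """Multiply an m x k matrix by a k x n matrix (lists of rows)."""
    m, k, n = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions do not match")
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)]
            for i in range(m)]


def dense_layer(inputs, weights, bias):
    """Each output = ReLU(inputs @ weights + bias); inputs is a batch of rows."""
    z = matmul(inputs, weights)
    return [[max(0.0, v + bias[j]) for j, v in enumerate(row)] for row in z]


batch = [[1.0, 2.0]]                 # one input with two features
weights = [[1.0, -1.0], [0.5, 2.0]]  # 2 inputs -> 2 outputs
bias = [0.5, -4.0]
print(dense_layer(batch, weights, bias))  # [[2.5, 0.0]]
```

Multiplying an m×k matrix by a k×n matrix takes m·k·n multiply-adds, so this single operation dominates the cost of many models, which is why hardware vendors advertise its speed-up.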

CPU and GPU

After receiving the image from the camera in RAW, YUV or JPEG format, the camera application can run it through more general image processing algorithms, which usually run on general-purpose processors like the CPU or GPU.
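
As an example of general-purpose processing on the CPU, an app that receives YUV frames often converts them to RGB before further work. The sketch below assumes tightly packed planar YUV 4:2:0 data (I420, with no row or pixel strides and an even width and height) and full-range BT.601 (JFIF) coefficients; Android's YUV_420_888 buffers can use strides and other color conventions, so a real app would need to account for those. The function names are hypothetical.

```python
# Sketch: CPU-side YUV 4:2:0 -> RGB conversion.
# ASSUMPTIONS: tightly packed I420 planes (no strides), even width/height,
# full-range BT.601 (JFIF) coefficients.

def clamp_to_byte(value):
    """Round to the nearest integer and clamp to the 0..255 range."""
    return max(0, min(255, round(value)))


def yuv_to_rgb(y, u, v):
    """Convert one full-range BT.601 YUV sample to an (R, G, B) tuple."""
    d, e = u - 128, v - 128
    r = y + 1.402 * e
    g = y - 0.344136 * d - 0.714136 * e
    b = y + 1.772 * d
    return clamp_to_byte(r), clamp_to_byte(g), clamp_to_byte(b)


def i420_to_rgb(y_plane, u_plane, v_plane, width, height):
    """Each 2x2 block of luma samples shares one U and one V sample."""
    chroma_width = width // 2
    pixels = []
    for row in range(height):
        for col in range(width):
            luma = y_plane[row * width + col]
            ci = (row // 2) * chroma_width + (col // 2)
            pixels.append(yuv_to_rgb(luma, u_plane[ci], v_plane[ci]))
    return pixels


# A 2x2 frame: black, white and two mid-gray pixels sharing neutral chroma.
print(i420_to_rgb([0, 255, 128, 128], [128], [128], 2, 2))
```

A per-pixel loop like this is simple but slow in pure Python; it is the kind of work that, in practice, is vectorized or moved to the GPU.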

In later versions of Android, reprocessing APIs were introduced that allow the software layer to submit an image back through the framework to the HAL, so that certain image processing algorithms can run on the specialized hardware mentioned above for better performance. I’ll explain this in detail in a separate article.