Some core properties of a camera sensor - what makes a good camera sensor?

Most of us today have a smartphone we can’t keep our hands off, and one of its most important features is the camera. Many photography enthusiasts also own additional equipment such as a DSLR, a mirrorless camera, a GoPro or a drone. So how do we decide if a camera is good or bad? It depends on several components: the main ‘sensor’ in the camera, the software stack (usually tied to the manufacturer), the processor that dictates the camera’s performance, and so on.

In this article I’ll explain some lesser-known properties of a camera sensor that can be used to evaluate the sensors used in cameras.

I don’t think this will help you select your next smartphone, as most of this information is not published by smartphone manufacturers. Instead, it will give you a little more insight into the metrics that matter at the sensor level.

Figure: A CMOS sensor in a mirrorless camera.

Basics of a camera sensor

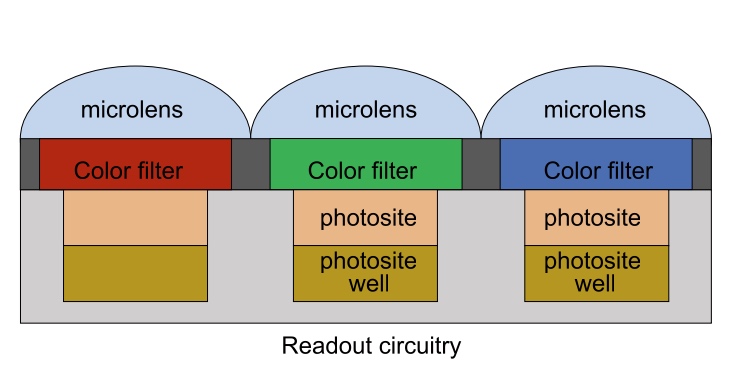

Figure: Simple high level diagram of camera sensor pixel level circuitry.

Color filters

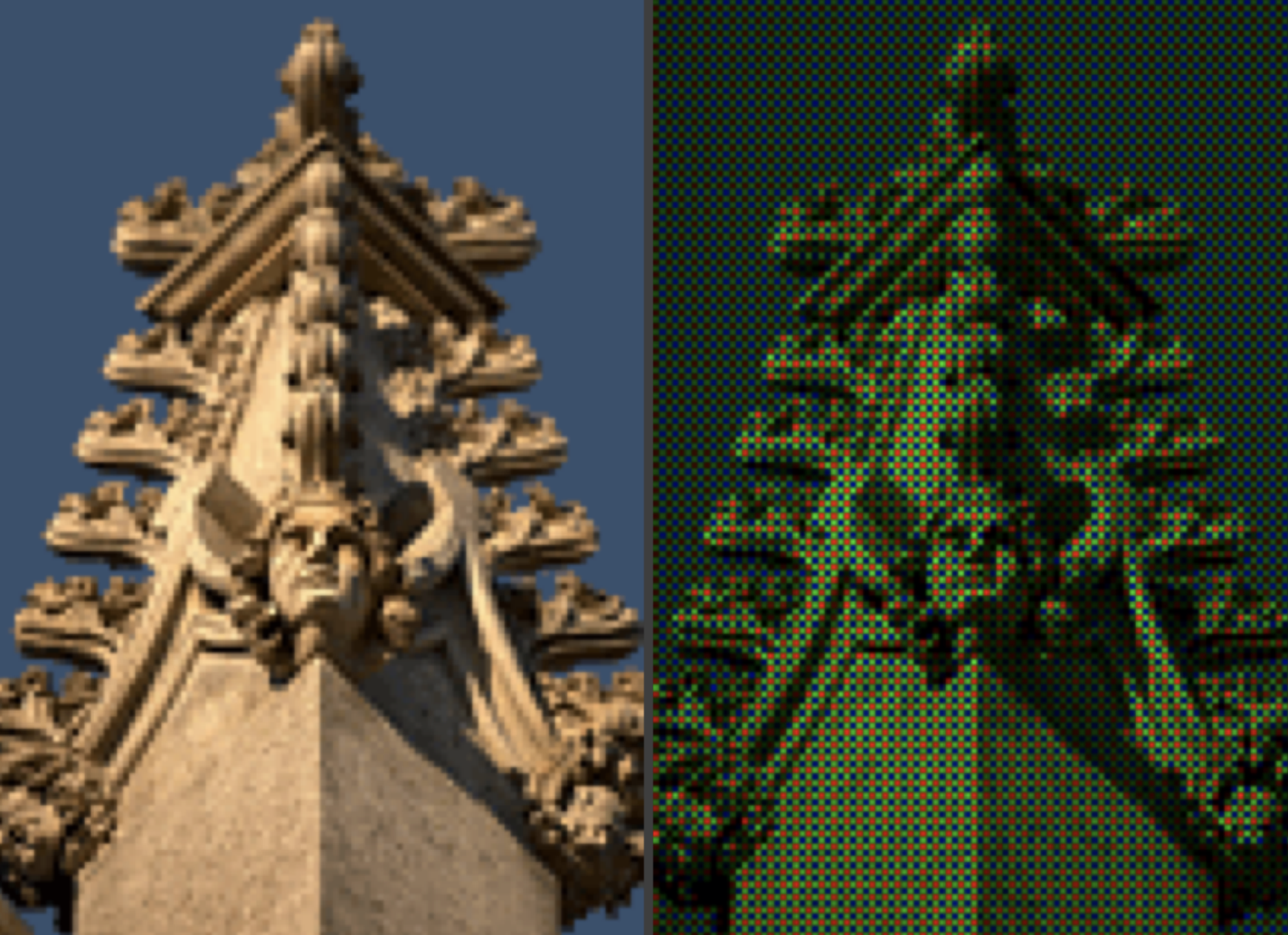

A typical photo sensor detects the intensity of light with little or no wavelength specificity, and thus cannot separate the colors in the incoming light. To capture color, sensors usually have a 2D array of pixels, each covered by a color filter that only lets light in a certain wavelength range pass, so each pixel collects a certain color. A typical arrangement is the RGBG pattern, in which each four-pixel block has one red, one blue and two green pixels. There are other kinds of filter patterns as well - you can read more about them here.
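To make the four-pixel block concrete, here is a minimal sketch of how a color filter array samples a scene. The tile layout (which corner holds red) and the function names are assumptions made for illustration; real sensors use different arrangements.

```python
# Illustrative only: a 2x2 tile with one red, one blue and two green filters,
# repeated across the sensor. Which corner is red is an assumption.
FILTER_TILE = (("R", "G"),
               ("G", "B"))

CHANNEL_INDEX = {"R": 0, "G": 1, "B": 2}


def filter_color(row, col):
    """Return the color filter covering the pixel at (row, col)."""
    return FILTER_TILE[row % 2][col % 2]


def mosaic(rgb_image):
    """Keep only the channel that each pixel's filter lets through.

    rgb_image is a list of rows, each row a list of (r, g, b) tuples.
    Returns a 2D list holding one intensity value per pixel.
    """
    return [
        [pixel[CHANNEL_INDEX[filter_color(r, c)]] for c, pixel in enumerate(row)]
        for r, row in enumerate(rgb_image)
    ]


# A uniform 2x2 scene: every pixel receives (r=200, g=120, b=40).
scene = [[(200, 120, 40)] * 2 for _ in range(2)]
raw = mosaic(scene)
```

Each pixel in `raw` now holds a single number, which is why the missing two colors per pixel have to be estimated later in the image processing pipeline.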

Photosite & Photosite well

A photosite is composed of photosensitive material (a photodiode) that releases electrons upon receiving photons. When the shutter button is clicked, electrons begin to accumulate in the photosite well, and collection stops when the shutter closes again. This duration is commonly referred to as the exposure time.

Microlens

A microlens (a.k.a. lenslet) helps the photosite collect more light by bending rays towards the photosensitive area, thus increasing the number of photons received.

Readout circuitry

The accumulated electrons are sensed and amplified based on the sensor’s sensitivity settings, then converted to discrete signals in the Analog to Digital Conversion (ADC) stage. The discrete signals then undergo a series of image processing steps, and the output is usually generated for user consumption in 8-bit format. You can read more about these steps in another article (although scoped to Android).
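As a rough sketch of the amplify-then-digitize step, the snippet below maps an electron count to an ADC code and then down to 8 bits. The gain, the full-scale electron count and the 10-bit ADC depth are all assumed values for illustration, and the final bit shift stands in for what is really a much longer image processing pipeline.

```python
def read_out(electrons, gain=1.0, full_scale_electrons=10_000, adc_bits=10):
    """Amplify the collected charge and quantize it to an ADC code.

    All default values are assumptions chosen for illustration.
    """
    max_code = (1 << adc_bits) - 1
    amplified = electrons * gain
    code = int(amplified / full_scale_electrons * max_code)
    return max(0, min(max_code, code))  # clip to the ADC output range


def to_8bit(code, adc_bits=10):
    """Drop the least significant bits to fit an ADC code into 8 bits."""
    return code >> (adc_bits - 8)


half_scale = read_out(5_000)            # mid-level signal
boosted = read_out(2_500, gain=2.0)     # half the light, twice the gain
clipped = read_out(20_000)              # beyond full scale, so it clips
```

Doubling the gain gives the same code for half the electrons, which is the basic idea behind raising the sensitivity setting in low light.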

Different quality metrics

Moving on to the crux of the article: some very basic, yet not so commonly known, quality metrics of a camera sensor. All of these should be applicable to both CCD and CMOS sensors.

Quantum Efficiency

Quantum Efficiency is defined as:

QE = # of electrons / # of photons

Since the energy of a photon is inversely proportional to its wavelength, QE is measured over a range of wavelengths to characterize a device’s quantum efficiency at each photon energy level. Photographic film typically has a QE of less than 10%, while new commercial CCD-based sensors can have a QE of over 90%.
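The two relationships above can be sketched in a few lines. The function names are made up for this example, and the electron and photon counts are invented numbers rather than measurements.

```python
PLANCK_J_S = 6.62607015e-34      # Planck constant, in joule-seconds
LIGHT_SPEED_M_S = 299_792_458    # speed of light, in metres per second


def photon_energy_joules(wavelength_nm):
    """Photon energy E = h * c / wavelength; shorter wavelength, more energy."""
    return PLANCK_J_S * LIGHT_SPEED_M_S / (wavelength_nm * 1e-9)


def quantum_efficiency(electrons, photons):
    """QE = number of electrons collected / number of incident photons."""
    return electrons / photons


# Hypothetical measurement: 1000 incident photons produced 450 electrons.
qe = quantum_efficiency(450, 1000)

# Energy per photon for blue-ish, green-ish and red-ish light.
energies = {nm: photon_energy_joules(nm) for nm in (450, 550, 650)}
```

In practice the QE value would be computed separately at each wavelength, giving a curve rather than a single number.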

Photosite full well capacity

We can assume sensors mostly have a linear response to incident photons (with the slope of the line being the quantum efficiency), but at a certain point the photosite well can hold no more electrons and is said to be saturated. The number of electrons a photosite can hold before saturating is called its full well capacity.
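This idealized response, a straight line that flattens once the well is full, can be written as a one-line model. It is a simplification under the linearity assumption stated above; the QE and capacity numbers are made up.

```python
def collected_electrons(photons, qe, full_well_capacity):
    """Linear response with slope `qe`, clipped at the full well capacity."""
    return min(photons * qe, full_well_capacity)


# Hypothetical photosite: QE of 0.5 and a well that holds 20,000 electrons.
below_saturation = collected_electrons(10_000, 0.5, 20_000)
at_saturation = collected_electrons(100_000, 0.5, 20_000)
```

Past the saturation point, extra light adds no information, which is what shows up as blown-out highlights in a photo.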

Two factors that contribute to this are:

Pixel pitch: The length of one side of a square pixel (typically 1-20 micrometers).

Percentage fill factor: The percentage of the pixel area taken up by photosensitive material, which determines the actual area receiving the incident light.

However, it’s useful to note that microlenses play a role here: they bend light towards the photosensitive material, thus increasing the effective area receiving light. It is therefore more useful to evaluate the effective fill factor than the actual fill factor.
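As a small worked example, the light-collecting area of a square pixel follows directly from the pixel pitch and the fill factor. The pitch and both fill factor percentages below are invented for illustration, not figures for any real sensor.

```python
def light_collecting_area_um2(pixel_pitch_um, fill_factor_percent):
    """Area of a square pixel that receives light, in square micrometres."""
    return pixel_pitch_um ** 2 * fill_factor_percent / 100.0


# Hypothetical 2 micrometre pixel.
actual_area = light_collecting_area_um2(2.0, 50)       # photodiode alone
effective_area = light_collecting_area_um2(2.0, 90)    # with a microlens
```

The same formula is used for both cases; only the fill factor changes, which is why the effective value is the more telling one.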

So, what makes a camera sensor good?

There are of course more components, but if you are considering the properties mentioned above, you want higher quantum efficiency and a larger full well capacity in order to take high-SNR and high-dynamic-range shots.
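To see why these two properties matter, here is a sketch using two commonly used textbook approximations: when photon shot noise dominates, SNR is the square root of the collected electrons, and dynamic range is the ratio of full well capacity to the noise floor. The read noise figure is an assumption introduced for this example; it is not one of the properties covered above.

```python
import math


def shot_noise_limited_snr(signal_electrons):
    """SNR when shot noise dominates: signal / sqrt(signal) = sqrt(signal)."""
    return math.sqrt(signal_electrons)


def dynamic_range_db(full_well_capacity, read_noise_electrons):
    """Largest over smallest detectable signal, expressed in decibels."""
    return 20 * math.log10(full_well_capacity / read_noise_electrons)


# Same light (20,000 photons) on two hypothetical sensors with different QE.
snr_low_qe = shot_noise_limited_snr(20_000 * 0.25)
snr_high_qe = shot_noise_limited_snr(20_000 * 0.81)

# Same assumed read noise (10 electrons), different full well capacity.
dr_small_well = dynamic_range_db(10_000, 10)
dr_large_well = dynamic_range_db(100_000, 10)
```

Under these assumptions, a higher QE yields more electrons from the same light and therefore a higher SNR, while a deeper well raises the ceiling of the dynamic range.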

References

This article has been imported from my original blog - blog.minhazav.dev.