“OK, ML Kit, how smart are you?”

An intro to ML Kit and why you should learn more about it.

“Learning ML is hard”, they said.

Yes, that’s true.

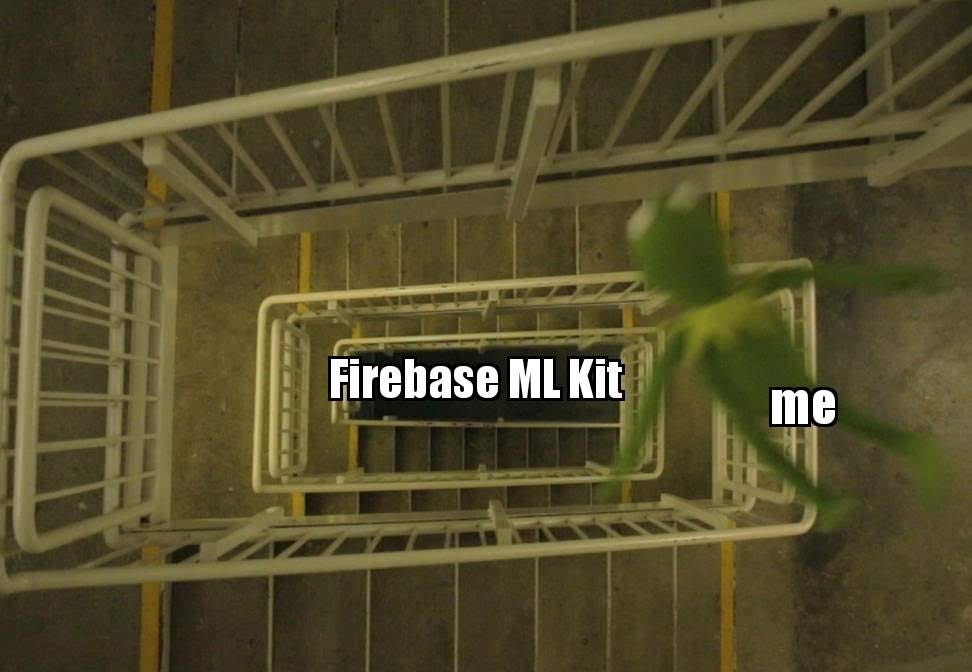

I never wanted to pursue Machine Learning in depth (braces self for those “How can you say that?! You know ML is the future, right?” comments), mostly because my interests lie in UX design and mobile app development.

I just love building apps that balance minimal, elegant design with great functionality, and I spend most of my time sketching mock-ups of apps I want to build, with nothing but a piece of paper and a pencil.

ML is being used all around us now, and one very interesting, developer-oriented example is the new **ML Kit** API that Google released as part of the Firebase product family. 🔥

This article is a monologue of my thoughts on Firebase ML Kit, a quick walkthrough of the features and why you should start learning about it now.

“But Bapu, do you even have the faintest idea of ML?”

Good question!

I don’t.

For someone who has absolutely zero knowledge of Machine Learning, I find it quite daunting to start learning how it works, let alone begin using it in a mobile app.

Out of curiosity, I checked out the official docs for ML Kit before deciding where to go from there. I also watched the introductory video, which got me even more hooked on ML Kit and made me want to explore it in detail. Here’s the video, in case you missed it:

<center><iframe width="560" height="315" src="https://www.youtube.com/embed/ejrn_JHksws" frameborder="0" allowfullscreen></iframe></center>

After going through the introduction and a quick walkthrough of the features that were released so far, I fell in love with ML Kit and couldn’t get back up. ❤️

I’ll be writing a multi-part article about how to use ML Kit in your mobile app, right after this introductory article about the API.

For now, let’s get into what the ML Kit does and take a brief tour of the features.

Features in ML Kit

As of April 2019, here is a list of features that ML Kit currently provides:

- Text recognition 🕵️‍♂️
- Face detection 👦
- Barcode scanning 🔎
- Image labeling 🏞
- Landmark recognition 🗼
- Language detection 📝
- Smart reply ✉️
- Using custom TensorFlow Lite models ☁️

Note: Keep in mind that ML Kit is currently in Beta, so not all features may work as expected. ⚠️

Additionally, it’s also important to note that some of these features can be configured to work in two different modes:

- the on-device mode (a.k.a. the offline mode), and
- the cloud mode (a.k.a. the better results, network-only mode).

**Yet another note:** The cloud mode uses the Cloud Vision API, which requires you to be on the Firebase Blaze plan. Once you’re on this plan, the first 1,000 calls to the API each month are free, after which you’ll have to pay. 💰
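To get a feel for how that free quota plays out, here’s a tiny back-of-the-envelope sketch in plain Python (this is not ML Kit code). The function name and the `price_per_call` value are hypothetical placeholders; only the 1,000 free calls figure comes from the note above, so look up the actual Cloud Vision rates before relying on any number.

```python
FREE_CALLS = 1_000  # free quota mentioned in the note above


def estimate_cloud_cost(calls_made: int, price_per_call: float) -> float:
    """Estimate the charge for `calls_made` cloud-mode calls.

    `price_per_call` is a placeholder you supply yourself; it is NOT an
    official rate.
    """
    if calls_made < 0:
        raise ValueError("calls_made cannot be negative")
    # Only the calls beyond the free quota are billable.
    billable_calls = max(0, calls_made - FREE_CALLS)
    return billable_calls * price_per_call
```

For example, with a made-up rate, `estimate_cloud_cost(1500, 0.5)` only charges for the 500 calls beyond the free quota.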

Here is a summary of which features can be used on-device and which ones can be used on the cloud:

Let’s dive into each of these features one-by-one.

Text recognition

The idea here is pretty simple:

You take a picture of something that has text in it and ML Kit extracts any text that is present in that picture for you as a String (or list of Strings). 🕵️‍♂️

Click a pic -> Get the text in the pic

Here are a few examples of where this feature could come in handy:

If you’re building an app that needs to scan:

- a document and get the text in the document, or
- a contact card and save the contact details onto the user’s phone.

These are just two examples; feel free to explore more use cases.

The on-device mode only lets you detect text that is written in Latin script. Here’s where another neat little aspect of this feature comes in handy: if you use the cloud-based mode, you can also detect text that is written in non-Latin scripts.

You can find the entire list of supported languages here.

Face detection

The face detection feature of the ML Kit API lets you scan for faces in your picture. 👦

As of now, only human faces can be detected (my condolences to my puppies, kittens and all aliens across the universe 😌).

Click a pic -> Get all faces in the pic

Once a face is detected, you can:

- get the coordinates of the eyes, ears, cheeks, nose and mouth,
- get the contours of the face, which is the outline and shape of the face,
- detect facial expressions (Is the face smiling or not? Are the eyes closed or open?),
- uniquely identify each face in the picture, so each face has a unique ID.

There are two modes in the face detection feature: **fast** and **accurate**. The names are pretty self-explanatory: one favors speed, the other favors accuracy!

There’s also an option to set a minimum face size, so you can set a threshold below which the ML Kit API won’t detect the face.
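Here’s a rough sketch of what such a threshold means, written in plain Python rather than against the ML Kit API. The `Face` class and `filter_small_faces` function are hypothetical, and I’m assuming the minimum face size is expressed as a fraction of the image width.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Face:
    face_id: int
    width_px: int  # width of the detected face's bounding box, in pixels


def filter_small_faces(
    faces: List[Face], image_width_px: int, min_face_size: float = 0.1
) -> List[Face]:
    """Keep faces at least `min_face_size` (a fraction) of the image width."""
    if image_width_px <= 0:
        raise ValueError("image_width_px must be positive")
    return [f for f in faces if f.width_px / image_width_px >= min_face_size]
```

With a 1,000-pixel-wide picture and the default threshold, a face that is 50 pixels wide is dropped while one that is 300 pixels wide is kept.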

Barcode scanning

Okay, when I say “barcodes”, most people think of the striped, linear codes printed on product packaging.

But in ML Kit, barcodes are kinda synonymous with QR codes as well, the square, pixelated codes you scan with your phone.

Firebase ML Kit supports the scanning and extraction of information from both of these!

Click a pic -> Get info from the scanned barcode/QR code

Just click a picture that has one or more barcodes/QR codes in it and voilà! You can get all the information embedded in the barcode/QR code. 🔎

It doesn’t really matter if you scan the barcode/QR code upside down or in landscape orientation; ML Kit does its job well!

A few of the frequently-used information types that a barcode/QR code may have are phone numbers, email IDs, website links and WiFi information. You can find a complete list of supported information types here.
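To make the idea of “information types” a bit more concrete, here’s a simplified sketch in plain Python that guesses the type of a scanned value from its prefix. This is only an illustration, not how ML Kit does it internally; the function name `guess_value_type` and the returned labels are my own assumptions.

```python
def guess_value_type(raw_value: str) -> str:
    """Guess what kind of information a scanned code holds, by prefix."""
    lowered = raw_value.strip().lower()
    if lowered.startswith("tel:"):
        return "phone"
    if lowered.startswith("mailto:"):
        return "email"
    if lowered.startswith("wifi:"):
        return "wifi"
    if lowered.startswith(("http://", "https://")):
        return "url"
    # Anything else is treated as plain text.
    return "text"
```

In a real app you wouldn’t need this, since ML Kit hands you the value type along with the parsed fields.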

Also, keep in mind that there are many different formats for barcodes and QR codes, and ML Kit supports most of the standard ones, including Code 128, EAN-13, UPC-A, QR Code, Data Matrix, PDF417 and Aztec.

Image labeling

This is one of the more fun features of ML Kit. You click a picture and let ML Kit tell you what objects are detected in the picture. 🏞

Click a pic -> Get a list of objects in the pic

If you’re using this in on-device mode, you only get access to 400+ labels, which means ML Kit wouldn’t be very accurate in detecting things that lie outside of these 400+ labels.

I’d recommend splurging on cloud mode for image labeling. You get 10,000+ labels, so your images will be labeled more accurately and object detection is more precise. 🎯

You also have the option to set the number of results to get per call to the API and the confidence threshold a label must meet to be returned.
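Those two knobs are easy to picture with a small sketch in plain Python (again, not the ML Kit API). The `pick_labels` function, its parameter names and its default values are all hypothetical; each label is assumed to be a `(text, confidence)` pair with confidence between 0 and 1.

```python
from typing import List, Tuple

Label = Tuple[str, float]  # (label text, confidence between 0 and 1)


def pick_labels(
    labels: List[Label], confidence_threshold: float = 0.7, max_results: int = 5
) -> List[Label]:
    """Drop low-confidence labels, then keep the top `max_results`."""
    confident = [(text, conf) for text, conf in labels if conf >= confidence_threshold]
    # Highest confidence first.
    confident.sort(key=lambda pair: pair[1], reverse=True)
    return confident[:max_results]
```

A higher threshold gives you fewer but more trustworthy labels, while `max_results` simply caps how many you get back.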

Landmark recognition

I’m not entirely sure how often this feature would be used, but here we have it. This feature of ML Kit lets you take a picture and detect a landmark that’s in the picture. 🗼

Click a pic -> Get landmark in the pic

When you make a call to the ML Kit API for this feature, you get the name of the landmark and its geographic coordinates.

Language detection

This is a nifty feature for those who are working on apps with multilingual features. 📝

The language detection feature of the ML Kit API lets you detect which language a particular text is in.

Pass text to ML Kit -> Get detected language in the text

This supports over 100 languages, including Hindi, Arabic, Chinese and many more!

Find the entire list of supported languages here:

ML Kit language identification: supported languages | Firebase (firebase.google.com)

Smart reply

This is the latest addition to ML Kit’s list of features and I find this one in particular very cool! ✉️

The idea here is that you pass the messages sent to you by another user to ML Kit, and in return, ML Kit provides you with 3 smart replies that you can use to reply to that user.

Pass messages to ML Kit -> Get 3 smart replies

Based on the recent history of your conversation with the other user, ML Kit will recommend 3 replies to you.

You may have already seen this feature in LinkedIn and the Android Messages app.

Smart replies on LinkedIn

Using custom TensorFlow Lite models

This is for those of you who are already experienced with ML development.

This option lets you add TensorFlow Lite models to ML Kit and use them. ☁️

You can either include these models along with your app or host them with the help of Firebase.

Conclusion

This was a quick walkthrough of all the features in Firebase ML Kit. If you haven’t explored ML Kit yet, I urge you to get started with it today. Build a simple app just to play around with it and check out how it works! 😉

Here are all the articles in my ML Kit series:

“OK, ML Kit, how smart are you?”

*An intro to ML Kit and why you should learn more about it.* (medium.com)

Recognizing Text with Firebase ML Kit on iOS & Android

*A practical guide on implementing the text recognition feature with Firebase ML Kit.* (medium.com)