This is part 2 of the Introduction to Docker. It introduces some useful Docker tools and services and explains why you should give Docker a try in 2015.

Since Docker’s 1.3 release, it has been much easier for beginners to get started with Docker. What’s more, there are many useful tools and services that really make local development easier, and they are a big reason why 2015 is a good year to try Docker out.

Prerequisites to Learning Docker

I think the bar to get started with Docker is lower than what most people think. I run a lot of Docker workshops to teach people how to use Docker for the first time, and when people ask me if there’s a prerequisite for those workshops, I usually say the only prerequisite is at least some familiarity with running and deploying software in production. If you don’t have that, the whole concept of boxing up your application in a container to move it someplace else won’t really make sense.

However, if you have such experience, I think it will be really easy for you to get excited about Docker, because you can think about all the times you had some code work on your development machine only to have it blow up when it hit production, and you had no idea why.

With Docker, it’s easier to keep a consistent environment between development and production. And the effort it takes to actually get started with Docker is less than what most people expect. In my two-hour tutorial for O’Reilly, we start all the way from “hello Docker” and end with building a custom Dockerfile for a Python app and deploying it to a DigitalOcean server. We also make a code change in the app, deploy the code update, and then make an infrastructure change and deploy that.

Of course, it may take a little longer to get started with Docker on your own, but it’s still a lot easier than it was a year ago.

Boot2Docker

Boot2Docker is one project Docker really enhanced last fall. Docker can only run natively on Linux, so Boot2Docker is the way you’d install Docker on a Mac or Windows machine. It installs a tiny Linux VM to run Docker for you, and in 2014 Boot2Docker added a key feature that allows you to share volumes between the virtual Boot2Docker machine and your host.

This makes it much easier for you to do local development with Docker on a Mac or Windows machine, because you won’t have to rebuild your Docker images every time your code changes. Your Docker container can see new code changes, and this really removed a big impediment for developing quickly with Docker on a Windows or Mac machine.
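As a rough illustration of what volume sharing looks like in practice, here is a minimal Python sketch that builds a `docker run` command mounting a host source directory into a container with the `-v` flag. The image name `my-python-app`, the `/app` mount point, and the helper name are assumptions made up for this example, not anything prescribed by Docker or Boot2Docker.

```python
import os
import shlex


def docker_run_with_volume(image, host_dir, container_dir="/app"):
    """Build a `docker run` command that mounts host_dir at container_dir.

    The image name and container path are hypothetical placeholders.
    """
    # Docker expects an absolute host path on the left side of -v.
    host_path = os.path.abspath(host_dir)
    volume = "{}:{}".format(host_path, container_dir)
    return ["docker", "run", "--rm", "-v", volume, image]


if __name__ == "__main__":
    # Print the command instead of running it, so the sketch works
    # even on a machine without Docker installed.
    cmd = docker_run_with_volume("my-python-app", ".")
    print(" ".join(shlex.quote(part) for part in cmd))
```

Because the container reads the code from the mounted directory, edits on the host show up inside the container without rebuilding the image.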

The Docker Library

The Docker Library was another big thing that came out in the latter half of 2014. The Docker Library is a set of images for popular programming languages and other server appliances that come pre-configured in a very Docker-friendly way, with everything already installed for you.

For example, if you’re trying to wire up your Python application to use Docker, you can use one of the Docker Library images where they’ve already installed Python for you. They also provide some nice little hooks: if your code has a requirements.txt file, the image already contains the Docker instructions that install those requirements in your Docker container.

Docker Library images make it especially easy to get started on simple software applications, and you’ll really appreciate them because you won’t have to figure out all the commands for installing Nginx, Postgres, MySQL, and other service components. Everything is “Dockerized” for you: all you need to do is type a command like docker pull postgres, and it will pull down the fully Dockerized image.

Even better, Docker and the companies behind the software in each image keep the images up to date, so you don’t need to worry about whether you’ve applied the latest security updates. All in all, I think the Docker Library was a huge win for Docker.

GUIs

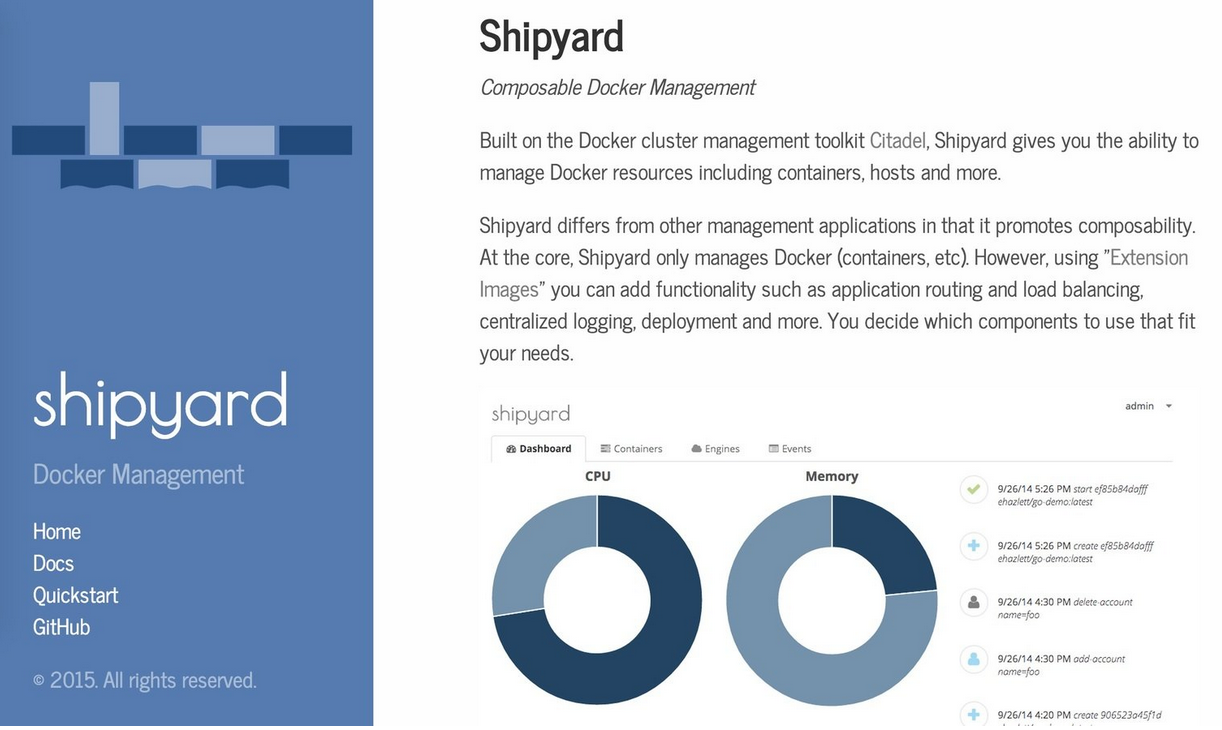

If you’re looking for a little more user-friendly insight on how your Docker containers are running once they’re out in production, you can grab a GUI for it. Shipyard is a nice project to satisfy that need.

Container-Specific Hosting

A new breed of Docker container-specific hosting is coming out from all the big cloud providers. With container-specific hosting services, you can just bundle up your app using Docker and drop it into one of the services. You won’t have to worry about installing Docker somewhere or about making sure the VM it runs on is locked down and secure.

Amazon is working on its EC2 Container Service, which is still in preview right now, but it will make it one step easier to deploy your containers to the cloud. The service will remove the burden of having to start an EC2 instance, install Docker, and manage a VM that isn’t doing much except running Docker for you.

Google has a similar offering called Google Container Engine, which is in its alpha stage, but you can probably try it right now. Microsoft Azure is also pledging to add Docker support to its cloud. At the moment there is a plugin in Azure that makes it easier to run a Linux machine with Docker installed, but the Windows and Azure teams are trying to make it easy to run straight Linux containers on their platforms, as well as a new technology they’re working on, which will be called Windows Server Containers.

Cloud-Agnostic Hosting

Tutum is a Docker container-specific hosting service, and it’s another reason Docker is a great thing to look at for your organization in 2015. One cool thing about containers is that once you package up your application locally in a Docker container, you have a high degree of confidence that the app will behave the same no matter where in the cloud you decide to deploy it. Tutum builds on that: you tell Tutum how and where you want to deploy your app, for example by connecting a database to an app server, or a cache to the app server and load balancer. Once you’ve configured these things, Tutum makes it trivial to switch between cloud hosting providers. Choosing which ones to deploy to is as easy as making sure your API keys for those services are in Tutum’s dashboard, and then you can just choose how many servers you want on each service.

All in all, Tutum makes it easy to move your entire software stack between clouds.

Cloud Computing Average Monthly Cost

As mentioned in the first part of this post, containers are cool because they give you flexibility to move between different cloud computing stacks and hosting providers.

Source: RBC Capital / Business Insider

This chart is from RBC Capital, and it graphs the monthly cost per gigabyte of RAM for all the big cloud providers. You can see that almost all of them had big price drops last year. AWS still has about 75% of the computing power across the industry, but it’s clear we’re starting to see more competition.
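To make the metric concrete, here is a small Python sketch of how a monthly cost per gigabyte of RAM could be computed from an hourly instance price, and how offers could then be compared. The provider names, prices, and the 730-hours-per-month figure are all invented for illustration; they are not taken from the RBC Capital chart.

```python
def monthly_cost_per_gb(hourly_price, ram_gb, hours_per_month=730):
    """Monthly cost of one gigabyte of RAM for an instance type.

    hours_per_month=730 is an assumed average month length.
    """
    return hourly_price * hours_per_month / ram_gb


def cheapest_provider(offers):
    """Return the name of the offer with the lowest cost per GB of RAM.

    offers maps a provider name to (hourly_price, ram_gb).
    """
    return min(offers, key=lambda name: monthly_cost_per_gb(*offers[name]))


if __name__ == "__main__":
    # Hypothetical offers, not real prices.
    offers = {
        "provider_a": (0.10, 4),
        "provider_b": (0.15, 8),
    }
    for name, (price, ram) in sorted(offers.items()):
        print(name, round(monthly_cost_per_gb(price, ram), 2))
    print("cheapest:", cheapest_provider(offers))
```

With a containerized stack, a comparison like this becomes actionable, since moving to the cheaper provider no longer means rebuilding your environment from scratch.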

Containers open up the possibility of switching providers not only because of price, but for several other reasons:

Service Outages

I’ve consulted for organizations that depend on AWS, and when AWS has some sort of outage or inconsistency, your ability to serve your web app suffers with it. For the most part, all you can do is bite your nails and wait until it’s over.

If you have a fully containerized stack and use a service like Tutum, you can imagine a scenario where it’s not difficult to run a minimal replica of your stack on a different cloud hosting service until the outage is over, to keep customers happy.

Performance

Right now, cloud computing providers make it easy to compare things such as RAM, but consistent measurements of CPU, network, and I/O performance are harder to get, since a lot of the time they depend on what people call “noisy neighbors”. For example, you can have an app on a virtual server on AWS while someone else has an app on the same physical server. If they’re having a busy sales day, your website might see some performance issues as a result. So, it would be cool if you could establish little metrics for your stack that monitor things like CPU, and move your stack to some other server if performance falls below a certain threshold.
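The idea of a performance threshold could be sketched along these lines in Python. This is only an illustration: the function name, the "higher is better" performance score, and the rule of requiring several consecutive bad samples are assumptions for the example, not a feature of Docker or of any hosting service.

```python
def should_migrate(samples, threshold, window=3):
    """Decide whether a stack should move to another server.

    samples is a list of performance scores, oldest first, where a
    higher score is better. Returns True only when the most recent
    `window` samples are all below `threshold`, so a single bad
    reading caused by a brief noisy neighbor does not trigger a move.
    """
    if len(samples) < window:
        # Not enough data yet to make a decision.
        return False
    return all(score < threshold for score in samples[-window:])


if __name__ == "__main__":
    # Hypothetical scores, for example requests served per second.
    print(should_migrate([120, 95, 90, 88], threshold=100))
    print(should_migrate([120, 95, 110, 88], threshold=100))
```

Requiring a run of bad samples is one simple way to avoid bouncing between servers on every momentary dip; a real monitor would likely need something more careful.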

Special Pricing

This is my crazy futurist dream, but as the cloud computing business becomes more competitive, providers could offer special pricing tailored to particular kinds of usage, and you could hop over to their servers when they have a particularly good deal for you.

Why 2015 is going to be a great year for containers and you

There’s a new player in the mix: CoreOS is building a container runtime engine called Rocket. It’s still at a really early stage, but it’s positioning itself as an alternative to Docker, specifically as a security-oriented alternative. I think what will happen in the end is that we will have a nice, healthy container ecosystem where both of these projects exist and have good followings, and most people will encounter both in their careers.

As most of my professional experience is as a consultant, I can easily see a scenario where if I’m working in an especially security-conscious organization, I’d recommend using Rocket instead of Docker (depending on how Rocket’s development shakes out). For now, however, if you’re trying to use containers in production, Docker is still the only game in town.

Furthermore, CoreOS has made it clear that they still plan to make CoreOS the best place to run Docker. So, I wouldn’t worry too much about Docker being harder to use on CoreOS if that’s what your organization is using.

What’s Next?

In 2014, it seemed as though Docker’s main focus was to get 1.0 production-ready and to establish as many partnerships as possible to make it easier to incorporate Docker into your infrastructure. In v1.3 they also smoothed out some rough edges that really frustrated beginners. Again, if you’re a beginner who tried Docker before its 1.0 release or shortly after, I would recommend giving Docker another shot, as it will be a bit easier to get acquainted with.

In 2015, Docker should focus on making Docker easier to use. They need to popularize best practices for scaling. Although lots of startups and organizations are using Docker, there need to be more large-scale, real-business deployments before larger corporations with lots of IT infrastructure take it up.

Furthermore, they should also add tools to the Docker ecosystem that make it easier to take an app from your local development machine and deploy it to the cloud, so you won’t have to write a bunch of your own deployment scripts. However, a lot of Docker’s partners already provide this sort of service, and Docker has pledged not to cannibalize its own ecosystem. Instead, they will try to provide the basics people need in order to complete a development workflow with Docker.

Finally, Docker’s focus in 2015 will also include addressing security issues, as security has been one of the things people are concerned about.

Codementor Andrew T. Baker is a software developer based in Washington, D.C. In 2014, he produced O’Reilly Media’s first Docker offering, the video tutorial Introduction to Docker. Andrew has presented at meetups and conferences to demystify Docker for developers and sysadmins alike. He worked as a full-stack Python developer for most of last year and took a break last fall to pursue his interests. Since then he has been working on Node.js, AngularJS and the Ionic framework. He blogs at AndrewTorkBaker.com, and his Twitter handle is @andrewtorkbaker. Finally, his Docker Hub account is atbaker.